Low-Resource NLP in the Era of LLMs - Introduction

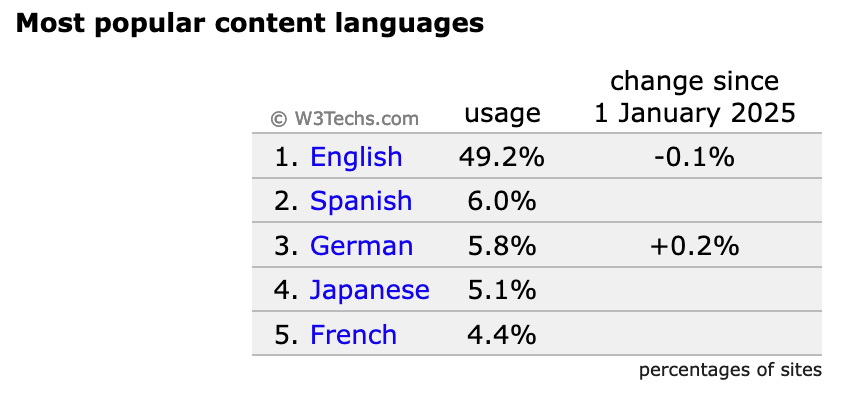

There have, undoubtedly, been drastic shifts in the landscape of Natural Language Processing (NLP) research and development with the breakthrough of Large Language Models (LLMs) like ChatGPT. However, the majority of LLMs are optimized for a few high-resource languages such as English. This is because these LLMs are pretrained on large corpora of text from the internet, and the digital footprint of a select few languages is significantly larger than that of the majority. According to W3Techs surveys, just five languages (English, Spanish, German, Japanese and French) account for over two-thirds of the content on the web.

One thing to note is that this digital divide is not representative of the global distribution of language speakers. For example, Mandarin Chinese has over 1.1 billion speakers globally but is not even among the top 5 languages for web content, while English with approximately 1.5 billion speakers accounts for nearly half of all web content. The digital world is heavily skewed towards Western European languages: among the top 5 content languages, Japanese is the only non-Western European language.

This is the first post in my “Low-Resource NLP in the era of LLMs” series. In this post, we will introduce the challenges in developing NLP systems for low-resource languages and the opportunities that the emergence of LLMs opens up in this space. In the subsequent posts, I will focus on hands-on experimentation, applying LLMs to different parts of the workflow for various low-resource NLP tasks.

Before jumping into the topics, let’s talk a bit more about low-resource languages and why we care about developing LLMs for these languages.

The Six Kinds of Languages

Joshi et al., 2020 define six classes of languages, from 0 to 5, based on their resource availability for NLP tasks. Class 0, or “The Left-Behinds”, contains languages with exceptionally limited data, while Class 5, or “The Winners”, contains languages with a dominant online presence and massive corporate and government investment in the development of language technologies. Class 0 languages benefit the least from the LLM breakthrough, and the authors claim that “it is probably impossible to lift them up in the digital space.”

The following figure shows the six classes of languages based on their resource availability for NLP tasks:

While Class 0, or The Left-Behinds, has practically no unlabelled or labelled data, it contains the largest share of languages and represents 15% of all speakers across classes. This means that even with LLMs and the recent breakthroughs in NLP, this disparity in the distribution of language resources poses a difficult challenge for global AI adoption.

Additionally, the consequences of lacking high-quality NLP systems for low-resource languages extend far beyond missed opportunities for broader global AI adoption. Globalization and the dominance of a few high-resource languages often lead to continued marginalization and, in some cases, even the disappearance of some languages from the digital space.

The Data Gap

Clearly, the availability of data, both labelled and unlabelled, is one of the most critical challenges when developing LLMs/NLP systems for low-resource languages. In fact, it is hard even to evaluate these models, because high-quality evaluation data is often unavailable for these languages.

This, however, is a vicious cycle: we need data to develop LLMs/NLP systems, but if these systems don’t support a language, the language is likely to be used less in the digital space, which in turn reduces the data available for model training. Disrupting this cycle will take a substantial amount of effort from multiple stakeholders, including language experts and domain experts. Collecting high-quality data is expensive because it requires acquiring data from a wide range of sources and domains, then inspecting, cleaning, and refining it.

Approaches to bridging this data gap include crowdsourcing, mining the web, and data augmentation using techniques like repetition of blocks of text, minor editing of text, or back-translation. With the emergence of LLMs, more recent approaches leverage the inherent abilities of LLMs to bridge both the data gap and the performance gap for low-resource languages.
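
To make the augmentation techniques mentioned above a little more concrete, here is a minimal Python sketch of two "minor editing" operations (adjacent-token swap and random deletion) plus back-translation. Everything in it is an illustrative assumption rather than a reference implementation: the function names are hypothetical, tokens are split on whitespace (which is a simplification for many languages), and `to_pivot` / `from_pivot` stand in for whatever translation system you have available.

```python
import random
from typing import Callable, List, Optional


def random_swap(tokens: List[str], rng: random.Random) -> List[str]:
    """Minor edit: swap one randomly chosen pair of adjacent tokens."""
    if len(tokens) < 2:
        return list(tokens)
    i = rng.randrange(len(tokens) - 1)
    out = list(tokens)
    out[i], out[i + 1] = out[i + 1], out[i]
    return out


def random_delete(tokens: List[str], rng: random.Random, p: float = 0.1) -> List[str]:
    """Minor edit: drop each token with probability p, keeping at least one."""
    if not tokens:
        return []
    kept = [t for t in tokens if rng.random() >= p]
    return kept if kept else [rng.choice(tokens)]


def back_translate(
    text: str,
    to_pivot: Callable[[str], str],
    from_pivot: Callable[[str], str],
) -> str:
    """Translate into a pivot language and back to obtain a paraphrase.

    Both callables are hypothetical placeholders for a real translation system.
    """
    return from_pivot(to_pivot(text))


def augment(
    text: str,
    rng: random.Random,
    to_pivot: Optional[Callable[[str], str]] = None,
    from_pivot: Optional[Callable[[str], str]] = None,
) -> List[str]:
    """Return distinct variants of `text`, excluding exact copies of the original."""
    tokens = text.split()  # assumption: whitespace tokenization is good enough
    variants = [
        " ".join(random_swap(tokens, rng)),
        " ".join(random_delete(tokens, rng)),
    ]
    if to_pivot is not None and from_pivot is not None:
        variants.append(back_translate(text, to_pivot, from_pivot))

    seen = {text}
    unique: List[str] = []
    for variant in variants:
        if variant and variant not in seen:
            seen.add(variant)
            unique.append(variant)
    return unique
```

Passing a seeded `random.Random` keeps the output reproducible. Edits this crude can easily break grammar or change meaning, so the variants would still need inspection before being used as training data.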

Leveraging LLMs for Low-Resource Languages

LLMs pre-trained on large corpora of multilingual text display multilingual abilities: they can understand and generate text in multiple languages. These models also exhibit cross-lingual transfer abilities, which means they can transfer knowledge learned during training on one language to improve on tasks in another.

Although it is often associated with pre-trained models, transfer learning is not a recent concept - it has been an actively researched area since as early as 1995. In the low-resource context, high-resource languages and domains have been leveraged to transfer knowledge and improve performance on low-resource languages and domains. Recent works have demonstrated strategies like multilingual pre-training (Conneau et al., 2020, Xue et al., 2020, Big Science BLOOM) and cross-lingual alignment (Tanwar et al., 2023), which help improve LLMs on tasks across multiple languages - including low-resource languages. Various Parameter Efficient Fine-Tuning (PEFT) methods (Lester et al., 2021, Hu et al., 2021, Liu et al., 2022, Liu et al., 2023) have also emerged as a potential solution, allowing effective fine-tuning of models with smaller amounts of labelled data.
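
As a rough illustration of why PEFT is attractive when data and compute are scarce, the sketch below works through the idea behind low-rank adaptation (LoRA, Hu et al., 2021), one of the methods cited above. The pretrained weight matrix W stays frozen and only two small matrices, B and A, are trained, so the layer computes h = Wx + (alpha / r) * B(Ax). The pure-Python helpers and the 4096 x 4096 layer size are assumptions chosen for illustration; they are not taken from any particular model or library.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole d_out x d_in matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_out + d_in)


def matvec(m: Matrix, v: Vector) -> Vector:
    """Plain matrix-vector product, kept dependency-free for readability."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]


def lora_forward(
    x: Vector, w: Matrix, a: Matrix, b: Matrix, alpha: float, rank: int
) -> Vector:
    """Compute h = W x + (alpha / rank) * B (A x) with W frozen."""
    base = matvec(w, x)                 # frozen pretrained path
    update = matvec(b, matvec(a, x))    # trainable low-rank path
    scale = alpha / rank
    return [h + scale * u for h, u in zip(base, update)]


if __name__ == "__main__":
    d_out, d_in, rank = 4096, 4096, 8   # illustrative sizes only
    full = full_finetune_params(d_out, d_in)
    lora = lora_params(d_out, d_in, rank)
    print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.4%}")
```

For this illustrative layer, the low-rank update trains 65,536 parameters instead of 16,777,216, which is under half a percent of the full matrix. In the LoRA paper B is initialized to zero, so the adapted layer starts out behaving exactly like the pretrained one.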

Read one of my previous posts, Low-Rank Adaptation of LLaMA 3 for Nepali and Hindi, where we discuss different Parameter Efficient Fine-Tuning (PEFT) techniques and share results from fine-tuning LLaMA 3 for Nepali and Hindi, two South Asian languages, one of which is high-resource and the other low-resource.

In addition to improving the model itself for low-resource languages, LLMs are also being used to enrich various other parts of the NLP pipeline. Existing research has shown that LLMs are few-shot and zero-shot learners (Wu and Dredze, 2019, Brown et al., 2020, Lin et al., 2022). This ability seems to provide an opportunity to bridge the data gap by using LLMs to generate synthetic data (Schick et al., 2021, Meng et al., 2022) in low-resource languages. More recently, LLMs have been used as judges to evaluate model output (Zheng et al., 2023); a similar setup can be used in low-resource settings to speed up both data creation and model evaluation.
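
The two ideas in the paragraph above, few-shot synthetic data generation and LLM-as-a-judge filtering, can be combined in a small loop. The sketch below is only one possible arrangement under stated assumptions: `llm` and `judge` are hypothetical callables that take a prompt string and return the model's text (wire them to whichever model or API you use), and the prompt wording and the YES/NO protocol are made up for illustration rather than taken from the cited papers.

```python
from typing import Callable, List, Optional, Tuple

Example = Tuple[str, str]  # (text, label)


def build_few_shot_prompt(language: str, label: str, examples: List[Example]) -> str:
    """Show a handful of labelled examples, then ask for one new sentence."""
    lines = [f"Write one new {language} sentence for the given label.", ""]
    for text, example_label in examples:
        lines.append(f"Label: {example_label}")
        lines.append(f"Sentence: {text}")
        lines.append("")
    lines.append(f"Label: {label}")
    lines.append("Sentence:")
    return "\n".join(lines)


def build_judge_prompt(language: str, text: str, label: str) -> str:
    """Ask a judge model for a YES/NO verdict on one generated example."""
    return (
        f"You are evaluating {language} training data.\n"
        f"Sentence: {text}\n"
        f"Proposed label: {label}\n"
        "Answer YES if the sentence is fluent and matches the label, otherwise NO."
    )


def generate_synthetic(
    llm: Callable[[str], str],
    language: str,
    label: str,
    examples: List[Example],
    n: int,
    judge: Optional[Callable[[str], str]] = None,
) -> List[Example]:
    """Sample up to n candidates and keep those the judge (if any) accepts."""
    prompt = build_few_shot_prompt(language, label, examples)
    accepted: List[Example] = []
    for _ in range(n):
        candidate = llm(prompt).strip()
        if not candidate:
            continue  # skip empty generations
        if judge is not None:
            verdict = judge(build_judge_prompt(language, candidate, label))
            if not verdict.strip().upper().startswith("YES"):
                continue  # rejected by the judge
        accepted.append((candidate, label))
    return accepted
```

Keeping `llm` and `judge` as plain callables makes the loop easy to test with stubs before spending tokens on a real model. A judge model may itself be weak in a low-resource language, so spot checks by native speakers remain important.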

Conclusion

Clearly, the digital divide between high-resource and low-resource languages is vast, and this divide has led to a lack of robust, high-performing NLP systems and LLMs for low-resource languages. A large number of languages around the globe continue to be under-represented on the web and in LLMs. Most LLMs that we have today are optimized for a select set of high-resource languages and perform poorly on low-resource languages. Recent studies have identified several strategies to improve LLMs and support NLP R&D for low-resource languages, including multilingual pretraining and leveraging the multilingual, cross-lingual, and zero/few-shot abilities of LLMs themselves. However, synthetic data generated by LLMs is not enough, and we need to foster local efforts in data sourcing at scale.

What Next in This Series?

Research dedicated to low-resource NLP and LLMs is limited, and it is still unclear how much we can achieve with LLMs alone. Although synthetic data could help with research on low-resource NLP and LLMs, it will not capture the nuances specific to different languages well. This means that for many use cases where these nuances matter, NLP systems and LLMs will soon hit a performance ceiling, and the accuracy of the models will not be good enough for real-world use.

In the upcoming posts, we will work through examples that apply LLMs at different stages of the NLP pipeline and see which problems we can solve in the process. We will most likely rely on transfer learning and PEFT methods to develop tools and resources for Nepali, a low-resource language.