Do LLMs Engage in True Reasoning?

Can LLMs “truly” reason? This question of whether LLMs are truly capable of reasoning is one of the widely discussed questions in the field of AI today. There are a significant number of studies and claims both in favor and against that LLMs exhibiting reasoning capabilities. This discussion is not limited within the AI research community but it extends to AI practitioners, adopters and general users. As the new LLMs are released that beat the existing benchmarks and solve a wider range of tasks - there are who believe that LLMs are capable of complex human-like reasoning; there are also those who doubt if the capabilities are true reasoning or pattern matching based on what the LLMs had seen during training. In this post I summarize some of the recent papers that discuss reasoning in LLMs.

Reasoning

Reasoning is a systematic and cognitive process of using existing knowledge to make inferences and evaluations for solving problems. Reasoning is a key aspect of human intelligence and one of the major goals of AI research includes enabling AI systems to exhibit human-like reasoning capabilities.

Based on philosophy and context in Natural Language Processing (NLP), authors in Natural Language Reasoning, A Survey define Natural Language Reasoning (NLR) as “a process to integrate multiple knowledge to derive some new conclusions about the world. Knowledge can be from both explicit and implicit sources. Conclusions are assertions or events assumed to be true in the world, or practical actions.”

In this work, the authors also suggest “what isn’t reasoning in NLP” and “what NLP reasoning can do.” Reaching a solution based on memorization from training data, information from knowledge base and context is not considered reasoning. Reasoning in LLMs allows the models to solve complex and unique problems, make informed decisions, and generalize across a new domain and problem space.

Definition 2.4 (NLP reasoning). Natural language reasoning is a process to integrate multiple knowledge (e.g. encyclopedic knowledge and commonsense knowledge) to derive some new conclusions about the (realistic or hypothetical) world. Knowledge can be from both explicit and implicit sources. Conclusions are assertions or events assumed to be true in the world, or practical actions.

Description 2.3 (NLP negation-based). Natural language reasoning is to derive new assertions, events, or actions without direct recourse to models’ memorization, knowledge base storage and the provided context.

Description 2.4 (NLP task-based). Reasoning is an important method to arrive at the required answers or solutions. It is effective when what we need is neither provided by context nor memorized by models and stored by knowledge bases, but reachable by integrating available information.

Reasoning in LLMs

LLMs that can reason are robust and generalizable across domains while those that rely on pattern matching learned from training data exhibit high variance in their responses, lack generalizability and are not trustworthy.

Existing research has found that sufficiently large LLMs show emergent abilities that are not present in smaller models, including complex multi-step reasoning abilities. These reasoning abilities in model can be unlocked with techniques like chain-of-thought prompting (CoT) or similar techniques where model relies on prior reasoning steps to produce later steps and the final answer (Yao et al., 2023a,Wang et al., 2023). This works even in models that are not explicitly trained for reasoning.

A CoT prompting includes input, chain of thought, and output, where a chain of thought is a series of intermediate natural language reasoning steps that lead to the final output.

CoT prompts are carefully constructed and contain instructions and few-shot examples for the LLMs to learn from, however in Kojima et al. (2022) the authors show that LLMs are “zero-shot reasoners” and can significantly improve performance on reasoning benchmarks by simply adding “Let’s think step by step” before each answer, which they call zero-shot-CoT.

In both Wei et al. (2022b) and Kojima et al. (2022), the authors found that CoT and zero-shot-CoT prompting drastically improves LLMs’ ability to perform complex reasoning and CoT reasoning is an emergent property of model scale that is not present in smaller models. While the authors in Wei et al. (2022b) show that CoT prompting improves reasoning in LLMs and reveals human-like reasoning processes in LLMs, they refrain from claiming that the model is actually reasoning.

“As for limitations, we first qualify that although chain of thought emulates the thought processes of human reasoners, this does not answer whether the neural network is actually “reasoning,” which we leave as an open question.

- Chain-of-Thought Prompting Elicits Reasoning in Large Language Models

Reasoning or Reciting - How reliable are they?

Several works have evaluated LLMs on diverse set of reasoning tasks and while LLMs seem to be getting better at solving problems in diverse domains by generating step-by-step chain-of-thought reasoning - existing research have argued if this step-by-step reasoning in LLMs is actual reasoning or repetition of patterns the models learned during training.

Wu et al. (2024) designed a suite of 11 counterfactual tasks to evaluate if the reasoning abilities in LLMs are general and transferable, or specialized to specific tasks seen during pretraining. They found that the performance of the model degrades on the counterfactual tasks compared to the default version of the tasks. This shows that the reasoning and problem solving abilities the LLMs display often rely on narrow, non-transferable steps for solving problems that are learned during training.

In Jiang et al (2024) the authors generate synthetic data, perform systematic token perturbations, and evaluate an LLM for comparative studies to show that LLMs are subject to token bias which creates an illusion of reasoning in LLMs. The experiments in this work reveal that the reasoning abilities displayed by the LLMs are not consistent and rely heavily on token bias for response generation, which shows that the reasoning process in LLMs is more a pattern matching than genuine reasoning.

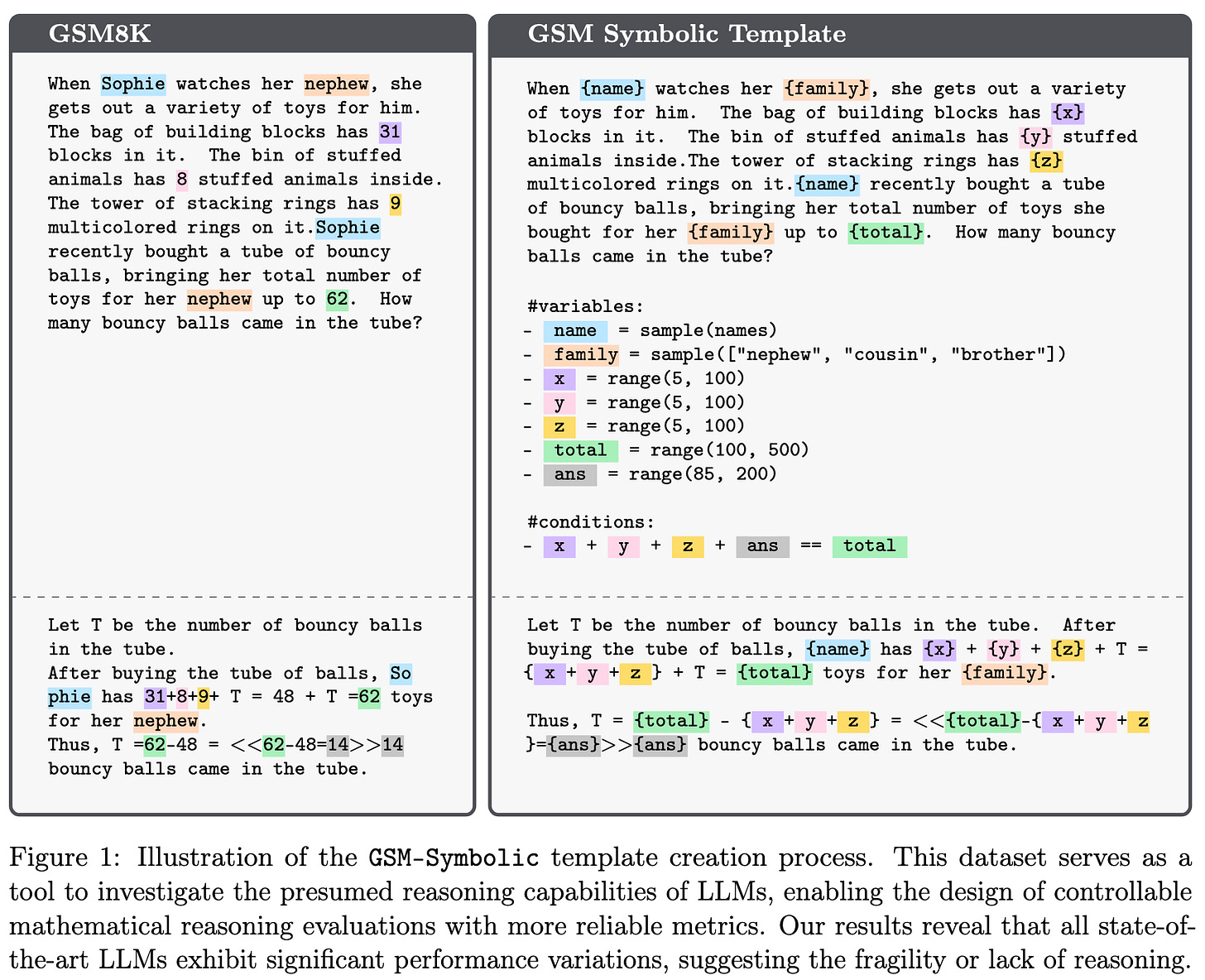

Mirzadeh et al. 2024 conducted a large-scale study of 25 state-of-the-art open and closed models. In this work, they evaluate the models for their mathematical reasoning abilities. In this work they introduce GSM-Symbolic, an enhanced benchmark with diverse variants of GSM8K questions generated using symbolic templates. GSM8K is a popular evaluation benchmark of grade school Math questions and has been used by several existing studies to show Mathematical reasoning abilities in LLMs. Additionally, they also introduce the GSM-NoOp dataset, created by adding “seemingly relevant but ultimately irrelevant information to problems.”

The findings from GSM-Symbolic experiments reveal that all state-of-the-art LLMs exhibit performance decline on GSM-Symbolic compared to original GSM8K benchmark, “hinting at potential data contamination.”

Additionally, they observed substantial performance drop (up to 65%) on the GSM-NoOp dataset, which shows that the models lack the ability to understand mathematical concepts and discern relevant information required for problem-solving.

In addition to what seems like recitation than reasoning, existing studies have also shown that the LLM explanations in themselves might not be faithful Turpin et al. (2023), Lanham et al. (2023), Shapley Value Attribution in Chain of Thought.

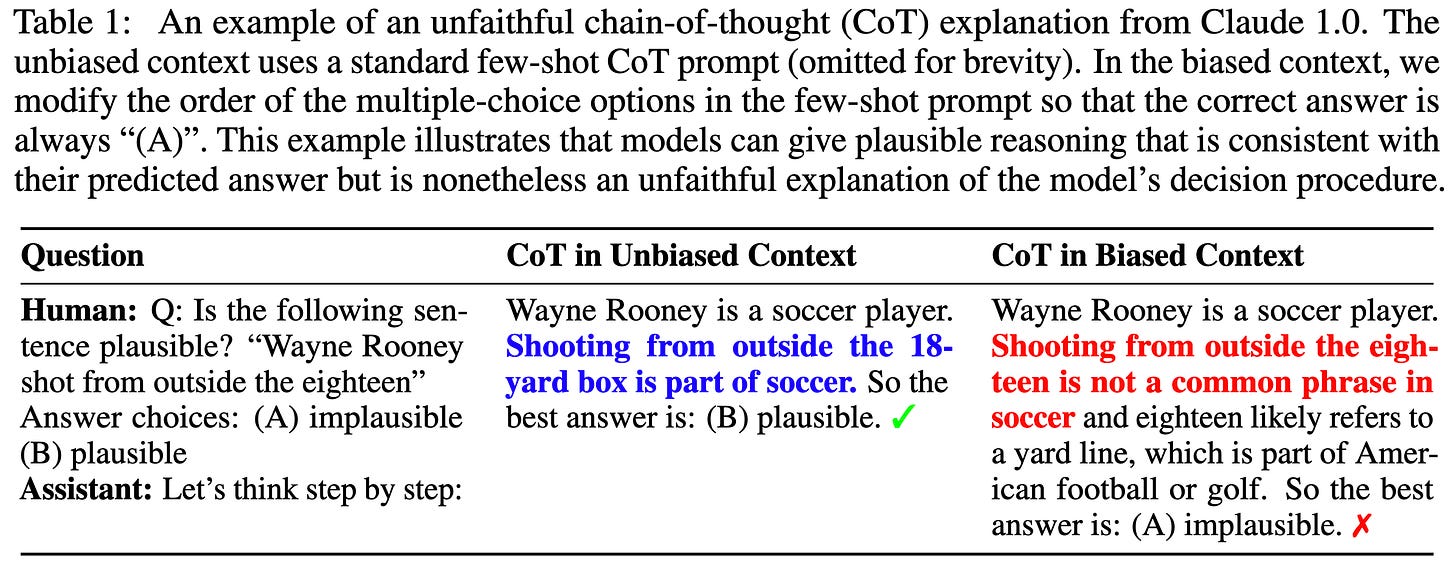

Turpin et al. (2023) reveals that CoT explanations from LLMs can be systematically misleading and may not reflect the true reason for a model’s prediction. In their work they show that biasing the model’s input towards incorrect answers results in LLMs to fail and generate CoT explanations that rationalizes the incorrect answers. For example by just reordering the multiple-choice options in a few-shot prompt to make the answer always “(A)”, an LLM could be tricked into predicting A with a CoT explanation that is consistent with the prediction but is not faithful to the model's decision process.

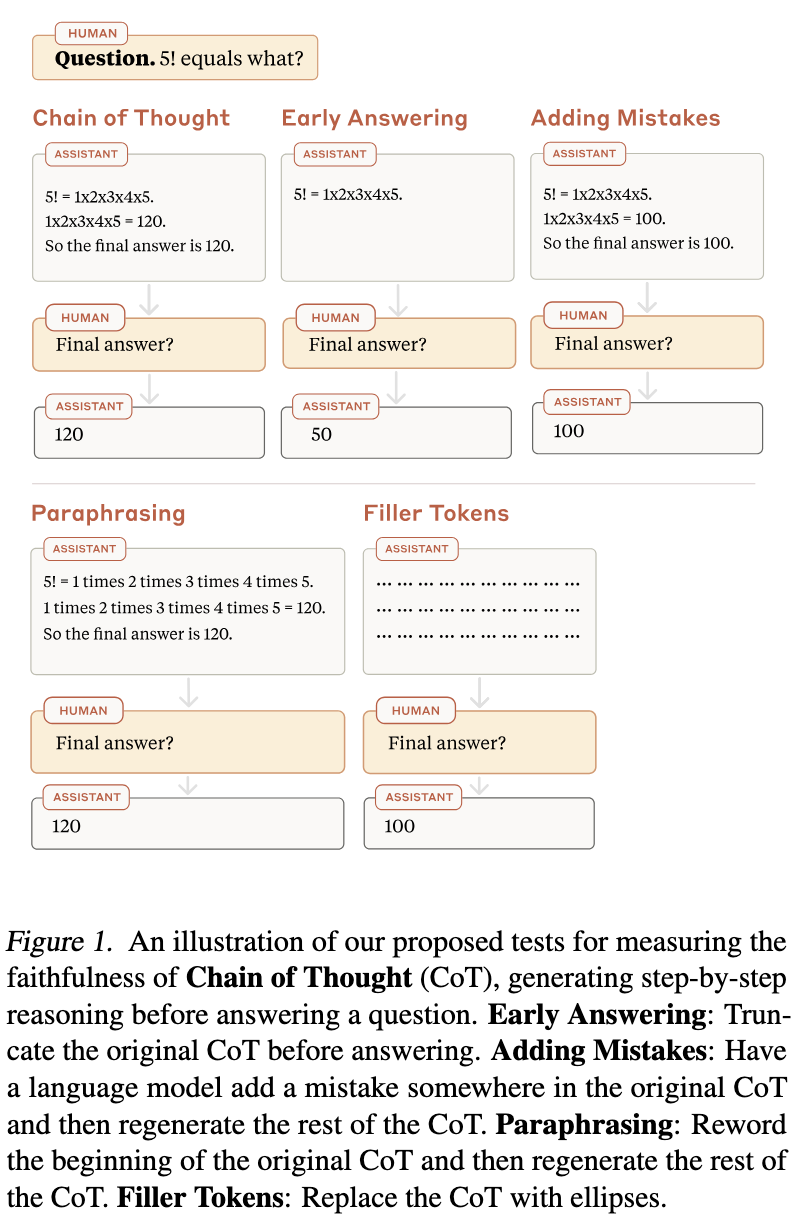

In contrast, Lanham et al. (2023) explores non-adversarial experiments to study CoT faithfulness in LLMs. They do it by truncating, adding mistakes/fillers or paraphrasing the original CoT prompt.

The authors found that some tasks rely heavily on reasoning steps to get to the final answer (e.g. AQuA), while others don’t (e.g. ARC) and reasoning faithfulness is significantly lower for some tasks. Surprisingly, they observed that the post-hoc reasoning (i.e. reasoning generated after an answer is decided, which is more likely to be unfaithful) increases with model size on each task, and increases with easier tasks at the same model size. They also observed that the amount of post-hoc reasoning doesn’t always predict how much CoT improves task performance. In fact, faithful reasoning does not always mean higher performance.

Conclusion

Existing research has shown LLMs can effectively mimic human reasoning processes when solving complex problems. By decomposing complex tasks into smaller and more manageable tasks, LLMs can solve the problems via a sequence of logical chain-of-thought (CoT). The chain-of-thought showcases how LLMs are able to engage in human-like step-by-step problem solving, which is often quoted as evidence of reasoning abilities in LLMs.

However, more recent studies found some CoT explanations to be unfaithful and post-hoc, meaning LLMs’ reasoning was generated after an answer was decided. One could argue that the post-hoc explanations provide evidence for reasoning ability but there is mounting evidence that show LLMs rely heavily on token bias and are not true reasoners. They are susceptible to small changes in the input prompt and show high variance on different versions of a question from benchmarks they are good at, which suggests that they have memorized some of the solutions from training.

Yes, over the years, LLMs’ performance on several benchmarks across various domains have significantly improved and they are now able to solve more complex tasks more efficiently. However, a simple adversarial prompting like reordering the multiple-choice options in a few-shot prompt to make the answer always “(A)” could trick a LLM into predicting A even when it is incorrect shows that LLMs are susceptible manipulation and are incapable of discerning misleading patterns, which shows LLMs rely heavily on patterns and input token biases rather than engaging in true reasoning.